Everybody has run, at some point, some form of shell pipeline:

sort file.txt | grep foo

and so on. Now, how exactly shells implement that varies from shell to shell. The POSIX standard is a bit vague on the subject:

...each command of a multi-command pipeline is in a subshell environment; as an extension, however, any or all commands in a pipeline may be executed in the current environment.

which in practice means that a shell may run none, some, or all of the commands of a pipeline in their own subshells. And that is indeed what happens; there are well-known differences between shell implementations.

Usually, what matters is whether the last element of a pipeline is run in a subshell or in the current shell. A blatant example of this, and one that often bites people, is using a while loop as follows:

count=0

somecommand | while IFS= read -r line; do

count=$((count + 1))

...

done

echo "Count is $count" # this prints 0 in most shells

Here we have a pipeline, and if the shell runs the last element of the pipeline (the while loop here) in a subshell, any variable set there does not affect the parent, and when "count" is printed at the end, it will still be 0 because here we are back in the parent. Here's another example that does not work as expected in shells that run the last part of a pipeline in a subshell:

echo 200 | read num

Here, if "num" is printed, it will have whatever value it had before the pipeline. Of the most popular shells, bash runs the last element of a pipeline in a subshell; ksh runs it in the current shell. Good shell programming should not depend on what the shell does. (As a matter of fact, in a second I'm going to present some code that is heavily dependent on what the shell does in those cases; it was part of a quick and dirty hack, so good practices probably don't matter too much there.)

For shells like bash that run the last part in a subshell, there are workarounds: see this page for more information.
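As a minimal sketch of one such workaround (not necessarily the one the linked page recommends), the loop can be fed from a here-document instead of a pipe, so that it runs in the current shell. Here `printf` is only a stand-in for `somecommand`, and the approach assumes the producer's output is small enough to be collected in full before the loop starts.

```shell
# printf stands in for "somecommand" (an assumption for illustration).
count=0
while IFS= read -r line; do
    count=$((count + 1))
done <<EOF
$(printf 'one\ntwo\nthree\n')
EOF
# No pipeline is involved, so the loop ran in the current shell.
echo "Count is $count"
```

This prints "Count is 3" in any POSIX shell. The trade-off is that the command substitution waits for the producer to finish and drops trailing empty lines, so it does not suit long-running or streaming producers.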

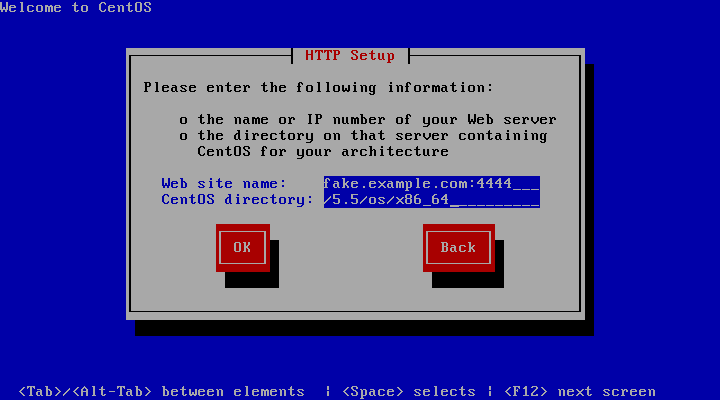

Now here's another, more subtle example that is affected by the behavior that I just described. In the CentOS network install behind a proxy article, I use a carefully crafted shell script that is run by socat, mentioning that it MUST be written that way otherwise it won't work. Here is the script again (for simplicity, using netcat instead of socat to connect to the proxy):

#!/bin/bash

# mangle.sh: invoke as "mangle.sh <mirror name> <proxy_address> <proxy_port>"

mirror=$1

proxy_addr=$2

proxy_port=$3

{ nc "$proxy_addr" "$proxy_port" && exec 1>&- ; } < <(

gawk -v mirror="$mirror" '/^Host: /{$0="Host: " mirror "\r"}

/^GET /{$2="http://" mirror "/" $2}

{print; fflush("")}' )

Here is the history of this script. The very first version was as follows:

#!/bin/sh

# mangle.sh: invoke as "mangle.sh <mirror name> <proxy_address> <proxy_port>"

mirror=$1

proxy_addr=$2

proxy_port=$3

gawk -v mirror="$mirror" '/^Host: /{$0="Host: " mirror "\r"}

/^GET /{$2="http://" mirror "/" $2}

{print; fflush("")}' | nc "$proxy_addr" "$proxy_port"

(note the shell is /bin/sh, not bash)

This was not working. The client sent the request, it was correctly mangled by the script, the server received it and sent back the reply, which socat relayed back to the client, but after that the client seemed to hang. Some investigation showed that the server was indeed closing the connection after sending all the data, but the EOF was not being propagated back to the client (which would unblock it). Further investigation revealed that nc was indeed detecting the server close, and terminating; so why did socat seem to be unaware of it?

Let's revisit how the above script was invoked by socat:

socat TCP-L:4444 EXEC:"./mangle.sh centos.cict.fr localproxy.example.com 8080"

With EXEC, socat runs the specified program connecting its standard input and output back to itself, so it can send/receive data to the program. Since this is a shell script, what is started is effectively a shell, with its standard input and output connected to socat. But the script contains a pipeline, so the shell spawns other processes (purposely vague for now) to run the various parts of the pipeline. All these processes inherit the parent's descriptors, so these processes start out with their standard inputs and outputs connected to socat. Then, gawk's stdout and nc's stdin are further redirected to the pipe connecting them. But gawk's stdin and nc's stdout remain connected to the external socat.

So when nc terminates, socat should detect EOF on that channel, right?

Wrong! Remember, there is another process that is sharing the same channel, and that is the initial shell that was spawned by socat to run the script. When a file descriptor is shared, it's not considered closed until all its open instances are closed! This means that, for socat to detect EOF on that channel, both nc and the parent shell must close it; it is not enough if only nc terminates. This is perhaps obvious, and I do know that all the descriptors must be closed; only, in this case, I was not considering the full picture.
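The effect can be reproduced without socat. In the following sketch (an illustration, not the original setup), `cat` plays the role of socat reading from the channel, and a background `sleep` plays the role of the process that still holds a copy of the descriptor. It assumes a `date` that supports `+%s`, which is common but not required by POSIX.

```shell
# Case 1: the background sleep inherits the write end of the pipe, so cat
# does not see EOF until sleep exits, although the subshell is long gone.
start=$(date +%s)
( echo data; sleep 3 & ) | cat >/dev/null
shared_elapsed=$(( $(date +%s) - start ))

# Case 2: sleep's stdout goes to /dev/null, so it holds no copy of the
# pipe's write end and cat sees EOF as soon as the subshell exits.
# (stderr is redirected too, only so the lingering sleep holds nothing.)
start=$(date +%s)
( echo data; sleep 3 >/dev/null 2>&1 & ) | cat >/dev/null
closed_elapsed=$(( $(date +%s) - start ))

echo "inherited descriptor: ${shared_elapsed}s, redirected: ${closed_elapsed}s"
```

In the first case the reader stays blocked for about as long as the sleep runs. In the second it returns almost immediately, because the last open copy of the write end disappears when the subshell exits.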

Once I realized this, I immediately saw the solution (or, that's what I thought):

#!/bin/sh

# mangle.sh: invoke as "mangle.sh <mirror name> <proxy_address> <proxy_port>"

mirror=$1

proxy_addr=$2

proxy_port=$3

gawk -v mirror="$mirror" '/^Host: /{$0="Host: " mirror "\r"}

/^GET /{$2="http://" mirror "/" $2}

{print; fflush("")}' | { nc "$proxy_addr" "$proxy_port" && exec 1>&- ; }

That is, once nc terminates, close fd 1 in the shell too. But this, again, was NOT working! Why not? Because /bin/sh on that system is bash, and bash runs the last element of a pipeline in a subshell! So I was closing fd 1 in that subshell, leaving the parent unaffected.
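A small sketch makes the point visible. It is run explicitly through `bash -c` because the behavior differs between shells, and `true` is only a stand-in for the gawk stage.

```shell
# The group is the last element of a pipeline, so bash runs it in a subshell.
# Closing fd 1 there does not touch the parent shell's fd 1.
out=$(bash -c 'true | { exec 1>&- ; }; echo "fd 1 is still open in the parent"')
echo "$out"
```

The message is printed, which shows that the parent shell's standard output survived the `exec 1>&-`.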

Alright. So we need to have this part:

{ nc "$proxy_addr" "$proxy_port" && exec 1>&- ; }

run in the context of the main shell. With bash, one can use process substitution and do this:

#!/bin/bash

# mangle.sh: invoke as "mangle.sh <mirror name> <proxy_address> <proxy_port>"

mirror=$1

proxy_addr=$2

proxy_port=$3

{ nc "$proxy_addr" "$proxy_port" && exec 1>&- ; } < <(

gawk -v mirror="$mirror" '/^Host: /{$0="Host: " mirror "\r"}

/^GET /{$2="http://" mirror "/" $2}

{print; fflush("")}' )

which is the final (working) version. Yes, gawk still runs in a subshell, but it doesn't matter here. What matters is that after nc terminates because it gets EOF from the proxy, file descriptor 1 is closed in the context of the current shell; once that happens, all the instances are closed and socat DOES see EOF from the script, propagates it back to the client, and everything works.
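The difference can be checked in isolation with a sketch like the following, where `true` stands in for the gawk stage (an assumption for illustration) and bash's complaint about the closed descriptor is discarded.

```shell
# The group is not part of a pipeline here, so it runs in the current shell
# and "exec 1>&-" closes that shell's own fd 1. The echo that follows has
# nowhere to write, so nothing is captured.
out=$(bash -c '{ exec 1>&- ; } < <(true); echo "this never reaches stdout"' 2>/dev/null)
echo "captured: [$out]"
```

The captured output is empty: the redirection on the group is undone when the group ends, but the `exec` inside it changes the shell itself.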

Note that, with a shell that runs the last element of a pipeline in the context of the current shell (e.g. ksh), the penultimate version should work too.

Thanks to Gerhard Rieger who with his hints made me realize that what I initially saw as a problem in socat was instead due to my incomplete understanding of what was going on.

Update 19/02/2012: Recent versions of Bash introduced an option that allows the last command in a pipeline to be executed in the current shell, not in a subshell: lastpipe (enabled with shopt -s lastpipe). Here's the description from the manual page: "If set, and job control is not active, the shell runs the last command of a pipeline not executed in the background in the current shell environment."
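A quick way to see the option at work is the `read` example from earlier in the article, sketched here with and without `lastpipe`. It assumes bash 4.2 or later, and relies on the fact that job control is not active in a non-interactive `bash -c`.

```shell
# Requires bash 4.2 or later; job control is off in non-interactive "bash -c".
with_lastpipe=$(bash -c 'shopt -s lastpipe; num=0; echo 200 | read num; echo "$num"')
without_lastpipe=$(bash -c 'num=0; echo 200 | read num; echo "$num"')
echo "with lastpipe: $with_lastpipe, without: $without_lastpipe"
```

With the option set, `read` runs in the current shell and "num" becomes 200; without it, "num" keeps its previous value of 0. In an interactive shell job control is normally active, so the option has no effect there unless job control is turned off.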