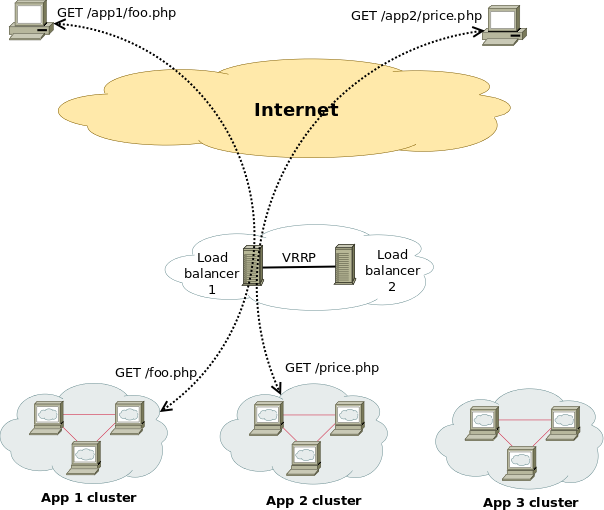

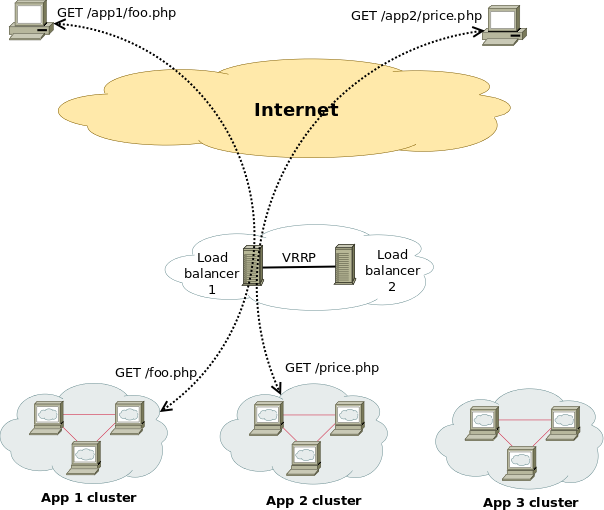

Let's imagine a situation where, for whatever reason, we have a number of web applications available for users, and we want users to access them using, for example,

https://example.com/appname/

Each application is served by a number of backend servers, so we want some sort of load balancing. Each backend server is not aware of the clustering, and expects requests relative to /, not /appname. Also, SSL connections and caching are needed. The following picture illustrates the situation:

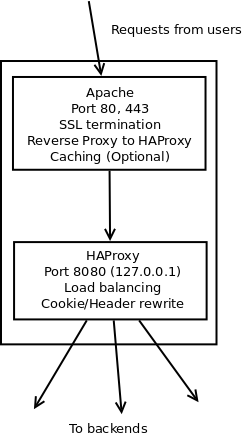

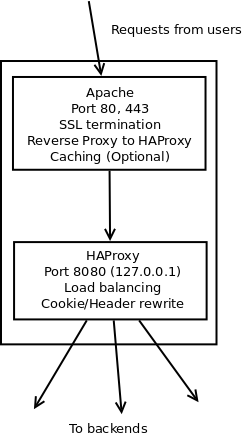

Here is an example of how to implement such a setup using Apache, HAProxy and keepalived. The configuration samples below are for the load balancer machine(s). Each load balancer consists of the following components:

Apache

Apache is where user requests land. The main functions of Apache in this setup are providing SSL termination, redirection for non-SSL requests (we want users to access everything over SSL), and possibly caching. Conforming requests are sent to HAProxy (see below) for load balancing.

Here is an excerpt from the Apache configuration:

# virtual host for port 80

# mostly just redirections

<VirtualHost *:80>

ServerAdmin admin@example.com

ServerName lb1.example.com

# add ServerAlias as needed

RewriteEngine on

# redirect everything to https, the (.*) has a leading /

RewriteRule ^(.*)$ https://%{HTTP_HOST}$1 [R,L]

ErrorLog ${APACHE_LOG_DIR}/error.log

# Possible values include: debug, info, notice, warn, error, crit,

# alert, emerg.

LogLevel warn

CustomLog ${APACHE_LOG_DIR}/access.log combined

</VirtualHost>

# virtual host for SSL

<IfModule mod_ssl.c>

NameVirtualHost *:443

<VirtualHost *:443>

ServerAdmin admin@example.com

ServerName lb1.example.com

# add ServerAlias as needed

SSLEngine on

SSLProxyEngine on

RewriteEngine On

# SSL cert files

SSLCertificateFile /etc/apache2/ssl/example.com.crt

SSLCertificateKeyFile /etc/apache2/ssl/example.com.key

SSLCertificateChainFile /etc/apache2/ssl/chain.example.com.crt

# Redirect requests not ending in slash eg. /app1 to /app1/

RewriteRule ^/([^/]+)$ https://%{HTTP_HOST}/$1/ [R,L]

# Uncomment this (and enable mod_disk_cache) to enable caching

# CacheEnable disk /

# pass everything to the local haproxy

RewriteRule ^/([^/]+)/(.*)$ http://127.0.0.1:8080/$1/$2 [P]

# The above RewriteRule is equivalent to multiple ProxyPass rules, eg

# ProxyPass /app1/ http://127.0.0.1:8080/app1/

# etc.

# THIS NEEDS A LINE FOR EACH APPLICATION

ProxyPassReverse /app1/ http://127.0.0.1:8080/app1/

ProxyPassReverse /app2/ http://127.0.0.1:8080/app2/

ProxyPassReverse /app3/ http://127.0.0.1:8080/app3/

# add other apps here...

<Proxy http://127.0.0.1:8080/*>

Allow from all

</Proxy>

ErrorLog ${APACHE_LOG_DIR}/error_ssl.log

# Possible values include: debug, info, notice, warn, error, crit,

# alert, emerg.

LogLevel warn

CustomLog ${APACHE_LOG_DIR}/access_ssl.log combined

</VirtualHost>

</IfModule>

So, nothing special here. Users trying to connect over plain HTTP, or not using the trailing slash, are automatically redirected to the right URL. Disk caching can be enabled using mod_cache and mod_disk_cache, and mod_proxy is used to send valid requests to HAProxy. The actual proxying is performed using a rewrite rule with the [P] flag, which is essentially equivalent to using ProxyPass, but has the advantage that the rule can be made generic and what would otherwise require N ProxyPass directives (where N is the number of backend applications) can be done with a single RewriteRule. (ProxyPassMatch could also have been used to achieve a similar result).

Unfortunately, there's no shortcut for the ProxyPassReverse and the other ProxyPassReverse* directives, which means that all the applications have to be explicitly listed there (one directive for each application).
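To make the request handling concrete, here is a minimal Python sketch of the decisions that the rewrite rules encode. It is only an illustration, not part of the Apache setup: the function name `route` and the action labels are made up for this example, and the regular expressions are direct transcriptions of the ones in the virtual hosts.

```python
import re

HAPROXY = "http://127.0.0.1:8080"  # local HAProxy address used in the Apache config


def route(scheme, host, path):
    """Return (action, target) for a request, mimicking the rewrite rules.

    Hypothetical helper for illustration only.
    """
    # Port 80 virtual host: everything is redirected to HTTPS.
    if scheme == "http":
        return ("redirect", "https://%s%s" % (host, path))
    # ^/([^/]+)$ : application name without a trailing slash.
    m = re.fullmatch(r"/([^/]+)", path)
    if m:
        return ("redirect", "https://%s/%s/" % (host, m.group(1)))
    # ^/([^/]+)/(.*)$ : proxied to HAProxy, path left unchanged ([P] flag).
    m = re.fullmatch(r"/([^/]+)/(.*)", path)
    if m:
        return ("proxy", "%s/%s/%s" % (HAPROXY, m.group(1), m.group(2)))
    # Anything else (for example "/") is not covered by the rules shown.
    return ("unhandled", path)


print(route("https", "example.com", "/app1"))
print(route("https", "example.com", "/app1/some/page"))
```

Note that the application name is still part of the path when the request reaches HAProxy; removing it is HAProxy's job.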

In this scenario sessions are not synchronized between backend servers, so once a new connection has been dispatched to a backend server, it must persist to it until the end (unless the server fails, of course, in which case it will be redispatched to another backend server, and users will have to log in again). This is accomplished by HAProxy through the use of cookies: a cookie is inserted in replies sent back to the client recording the backend that the connection is using. When new requests for the same session come from the client, HAProxy just needs to read the cookie to find out the backend server to use. This cookie is also removed from the request before it's sent to the backend, so the application never sees it.
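The persistence logic just described can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not HAProxy's actual implementation: `pick_server` is a hypothetical name, cookies are modelled as a plain dict, and the round-robin choice is a bare `itertools.cycle`.

```python
import itertools

SERVERS = ["app1_1", "app1_2", "app1_3"]  # cookie values from the "server" lines
_round_robin = itertools.cycle(SERVERS)


def pick_server(cookies, available):
    """Return (server, cookies_for_backend, set_cookie_or_None)."""
    wanted = cookies.get("SRVID")
    # The persistence cookie is removed before the request reaches the backend.
    forwarded = {k: v for k, v in cookies.items() if k != "SRVID"}
    if wanted in available:
        return wanted, forwarded, None
    # No cookie yet, or the recorded server is down: pick another server
    # and record the choice in a new cookie sent back to the client.
    for _ in range(len(SERVERS)):
        candidate = next(_round_robin)
        if candidate in available:
            return candidate, forwarded, "SRVID=%s" % candidate
    raise RuntimeError("no backend server available")


all_up = set(SERVERS)
print(pick_server({"session": "abc", "SRVID": "app1_2"}, all_up))
print(pick_server({"session": "abc", "SRVID": "app1_2"}, {"app1_3"}))
```

The second call models a failed server: the request is redispatched and a new cookie is issued, which is the point where users lose their application session.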

The Apache server terminates SSL, performs basic checks on the requests, redirects them if necessary, and (mostly) passes the traffic to HAProxy, which is listening on port 8080 (see below).

The second load balancer runs the same configuration (probably with ServerName set to lb2.example.com).

HAProxy

HAProxy analyzes the paths of the requests it receives to determine which application is being requested, and dispatches each request to the right backend. Since backends expect requests relative to /, HAProxy also needs to strip the /appname/ part from each request before forwarding it to the backend, and re-add it to replies on the way back. The application name can appear in some HTTP headers or in cookies, and HAProxy needs to fix it in all these places.
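As a rough illustration of this stripping and re-adding, the following Python sketch applies the same kind of regular-expression substitutions to a request line and to response headers. The function names are hypothetical, the application name is fixed to app1, and Python's `re` module only approximates HAProxy's own header rewriting.

```python
import re

APP = "app1"  # application name handled by this (hypothetical) backend


def rewrite_request_line(line):
    # "GET /app1/something HTTP/1.0" -> "GET /something HTTP/1.0"
    return re.sub(r"^([^ ]*) /%s/([^ ]*) (.*)$" % APP, r"\1 /\2 \3",
                  line, flags=re.I)


def rewrite_response_header(line):
    # "Location: http://host/x" -> "Location: http://host/app1/x"
    line = re.sub(r"^(Location:) http://([^/]*)/(.*)$",
                  r"\1 http://\2/%s/\3" % APP, line, flags=re.I)
    # "Set-Cookie: ...; path=/" -> "Set-Cookie: ...; path=/app1/"
    return re.sub(r"^(Set-Cookie:.* path=)([^ ]+)(.*)$",
                  r"\1/%s\2\3" % APP, line, flags=re.I)


print(rewrite_request_line("GET /app1/something HTTP/1.0"))
print(rewrite_response_header("Location: http://example.com/login"))
print(rewrite_response_header("Set-Cookie: sid=abc; path=/"))
```

Headers that match neither pattern pass through unchanged.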

Here is HAProxy's /etc/haproxy/haproxy.cfg:

global

##log 127.0.0.1 local0

##log 127.0.0.1 local1 notice

#log loghost local0 info

maxconn 4096

user haproxy

group haproxy

daemon

node lb1

spread-checks 5 # 5%

# uncomment this to get debug output

#debug

#quiet

# This section is fixed and just sets some default values.

# These values can be overridden by more-specific redefinitions

# later in the config

defaults

log global

mode http

# option httplog

option dontlognull

retries 3

option redispatch

maxconn 2000

contimeout 5000

clitimeout 50000

srvtimeout 50000

# Enable admin/stats interface

# go to http://lb1.example.com:8000/stats to access it

listen admin_stats *:8000

mode http

stats uri /stats

stats refresh 10s

stats realm HAProxy\ Global\ stats

stats auth admin:admin # CHANGE THIS TO A SECURE PASSWORD

# A single frontend section is needed. This listens on 127.0.0.1:8080, and

# receives the requests from Apache.

frontend web

bind 127.0.0.1:8080

mode http

# This determines which application is being requested

# These ACLs will match if the path in the request contains the relevant application name

# for example the first ACL (want_app1) will match if the request is for /app1/something/, etc.

acl want_app1 path_dir app1

acl want_app2 path_dir app2

acl want_app3 path_dir app3

# ... add lines for other applications here...

# these ACLs match if at least one server

# for the application is available.

acl app1_avail nbsrv(app1) ge 1

acl app2_avail nbsrv(app2) ge 1

acl app3_avail nbsrv(app3) ge 1

# ... add lines for other applications here...

# Here is where HAProxy decides which backend to use. Conditions

# are ANDed.

# This says: use the backend called "app1" if the request

# contains /app1/ (want_app1) AND the backend is available (app1_avail), etc.

use_backend app1 if want_app1 app1_avail

use_backend app2 if want_app2 app2_avail

use_backend app3 if want_app3 app3_avail

# ... etc

# If we get here, no backend is available for the requested

# application and users will get an error

########## BACKENDS ###################

backend app1

mode http

option httpclose

# The load balancing method to use

balance roundrobin

# insert a cookie to record the real server

cookie SRVID insert indirect nocache

option nolinger

# Here is where requests coming from Apache are rewritten to

# remove the reference to the application name

# The request is something like

# ^GET /app1/something HTTP/1.0$

# but it should be seen by the real server as /something/,

# so remove the application name on requests

reqirep ^([^\ ]*)\ /app1/([^\ ]*)\ (.*)$ \1\ /\2\ \3

# If the response contains a Location: header, reinsert

# the application name in its value

rspirep ^(Location:)\ http://([^/]*)/(.*)$ \1\ http://\2/app1/\3

# Insert application name in the cookie's path

rspirep ^(Set-Cookie:.*\ path=)([^\ ]+)(.*)$ \1/app1\2\3

# This is to perform health checking: just get /

# Adjust as needed by the specific application

# Requests have the User-Agent: HAProxy so they can be excluded from logs

# on the backend

option httpchk GET / HTTP/1.0\r\nUser-Agent:\ HAProxy

# Here is the actual list of local servers for the application

# adjust parameters as needed

server app1_1 192.168.0.46:80 cookie app1_1 check inter 10s rise 2 fall 2

server app1_2 192.168.0.47:80 cookie app1_2 check inter 10s rise 2 fall 2

server app1_3 192.168.0.48:80 cookie app1_3 check inter 10s rise 2 fall 2

# ...add other servers for the application here...

# the following backends follow the same pattern

backend app2

mode http

option httpclose

balance roundrobin

cookie SRVID insert indirect nocache

option nolinger

reqirep ^([^\ ]*)\ /app2/([^\ ]*)\ (.*)$ \1\ /\2\ \3

rspirep ^(Location:)\ http://([^/]*)/(.*)$ \1\ http://\2/app2/\3

rspirep ^(Set-Cookie:.*\ path=)([^\ ]+)(.*)$ \1/app2\2\3

option httpchk GET / HTTP/1.0\r\nUser-Agent:\ HAProxy

server app2_1 192.168.4.14:80 cookie app2_1 check inter 10s rise 2 fall 2

server app2_2 192.168.4.18:80 cookie app2_2 check inter 10s rise 2 fall 2

server app2_3 192.168.4.19:80 cookie app2_3 check inter 10s rise 2 fall 2

backend app3

mode http

option httpclose

balance roundrobin

cookie SRVID insert indirect nocache

option nolinger

reqirep ^([^\ ]*)\ /app3/([^\ ]*)\ (.*)$ \1\ /\2\ \3

rspirep ^(Location:)\ http://([^/]*)/(.*)$ \1\ http://\2/app3/\3

rspirep ^(Set-Cookie:.*\ path=)([^\ ]+)(.*)$ \1/app3\2\3

option httpchk GET / HTTP/1.0\r\nUser-Agent:\ HAProxy

server app3_1 172.17.5.1:80 cookie app3_1 check inter 10s rise 2 fall 2

server app3_2 172.17.5.2:80 cookie app3_2 check inter 10s rise 2 fall 2

server app3_3 172.17.5.3:80 cookie app3_3 check inter 10s rise 2 fall 2

# these are the error pages returned by HAProxy when an error occurs

# customize as needed

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

The second load balancer runs the same configuration (probably using "node lb2" in the config).
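The backend selection performed in the frontend section boils down to two conditions ANDed together for each application. Here is a small Python sketch of that logic; `choose_backend` is a made-up name, and `path_dir` is approximated as an exact match on one slash-delimited path component, which is an assumption about its behavior rather than a faithful reimplementation.

```python
APPS = ("app1", "app2", "app3")


def choose_backend(path, servers_up):
    """servers_up maps a backend name to its number of healthy servers."""
    components = path.split("?")[0].strip("/").split("/")
    for app in APPS:
        want = app in components               # acl want_appN path_dir appN
        avail = servers_up.get(app, 0) >= 1    # acl appN_avail nbsrv(appN) ge 1
        if want and avail:                     # use_backend appN if want_appN appN_avail
            return app
    return None  # no use_backend rule matched: the user gets an error


print(choose_backend("/app1/something/", {"app1": 3, "app2": 0}))
print(choose_backend("/app2/something/", {"app1": 3, "app2": 0}))
```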

Load balancer redundancy

Of course, we don't want the load balancer to be a single point of failure, so two load balancers are set up with identical configurations, and Keepalived is used to run VRRP between them. VRRP provides a "virtual" IP address (VIP) which is assigned to the active load balancer and is where traffic comes in (i.e., the address to which the URL used by users resolves). If the active load balancer fails, Keepalived transfers the VIP to the hot standby balancer, which takes over seamlessly. This is possible because the two load balancers need no shared state: all the information needed to dispatch to the backends is contained in the requests coming from users (in the form of HAProxy's persistence cookie); both balancers also perform the same health checks, so at any time both know which backends are available to dispatch new requests.

Here is /etc/keepalived/keepalived.conf:

vrrp_script chk_apache {

script "killall -0 apache2" # cheaper than pidof

interval 2 # check every 2 seconds

weight 2 # add 2 points of prio if OK

}

vrrp_instance apache_vip {

# Initial state, MASTER|BACKUP

# As soon as the other machine(s) come up,

# an election will be held and the machine

# with the highest "priority" will become MASTER.

# So the entry here doesn't matter a whole lot.

state BACKUP

# interface to run VRRP

interface eth0

# optional, monitor these as well.

# go to FAULT state if any of these go down.

track_interface {

eth0

eth1

}

track_script {

chk_apache

}

# delay for gratuitous ARP after transition to MASTER

garp_master_delay 1 # secs

# arbitrary unique number 0..255

# used to differentiate multiple instances of vrrpd

# running on the same NIC

virtual_router_id 51

# for electing MASTER, highest priority wins.

# THIS IS DIFFERENT ON THE LBs. SET 101 for the MASTER, 100 for the SLAVE.

priority 101

# VRRP Advert interval, secs (use default)

advert_int 1

# This is the floating IP address that will be added or removed to

# the LB's interface when a transition occurs.

virtual_ipaddress {

1.1.1.1/24 dev eth0

}

# VRRP will normally preempt a lower priority

# machine when a higher priority machine comes

# online. "nopreempt" allows the lower priority

# machine to maintain the master role, even when

# a higher priority machine comes back online.

# NOTE: For this to work, the initial state of this

# entry must be BACKUP.

nopreempt

#debug

}

The only difference between the versions of this file installed on the two balancers is the priority: the balancer that should start as active must have a higher priority than the other (101 versus 100 here), so VRRP knows which one to assign the VIP to.
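The priority arithmetic can be illustrated with a short Python sketch. It only models the comparison of priorities, with the script weight added while the Apache check succeeds. It deliberately ignores nopreempt, advertisement timing and interface tracking, all of which affect when a transition actually happens. The function names are hypothetical.

```python
def effective_priority(base, script_ok, weight=2):
    # A positive "weight" is added to the priority while the tracked
    # script (here: "killall -0 apache2") succeeds.
    return base + weight if script_ok else base


def highest_priority(nodes):
    """nodes maps a balancer name to (base_priority, apache_running)."""
    return max(nodes, key=lambda name: effective_priority(*nodes[name]))


# Both balancers healthy: 103 versus 102.
print(highest_priority({"lb1": (101, True), "lb2": (100, True)}))
# Apache down on lb1: 101 versus 102.
print(highest_priority({"lb1": (101, False), "lb2": (100, True)}))
```

This also shows why the two base priorities should differ by less than the script weight: otherwise a failed Apache check on the preferred balancer would not change the ordering.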

Final notes

Logging on the backends

On the backends, there are two things to be aware of when configuring logging:

- Normally, health checks performed by the load balancers will be logged;

- All the requests, including user requests, will appear to be coming from the load balancer's IP.

To solve the first problem, we can recognize health check requests by looking at the "user-agent" field, and if it's HAProxy, don't log the request.

For the second problem, we can see what the original IP was by looking at the X-Forwarded-For header that Apache kindly inserts when acting as a reverse proxy.

Putting it all together, here's a possible log configuration for a backend using Apache:

BrowserMatch ^HAProxy$ healthcheck

# define a log format that uses the X-Forwarded-For header to log the source of the request

LogFormat "%{X-Forwarded-For}i %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" mycombined

# log only if it's not a health check, and using the mycombined format

CustomLog ${APACHE_LOG_DIR}/access.log mycombined env=!healthcheck
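In other words, the backend makes two decisions for each request: whether to log it at all, and which address to record as the client. A minimal Python sketch of those two decisions follows; the function name is invented for this example and headers are modelled as a plain dict.

```python
def client_field_to_log(headers):
    """Return the client field to write to the access log, or None to skip."""
    # BrowserMatch ^HAProxy$ healthcheck, combined with env=!healthcheck:
    # requests whose User-Agent is exactly "HAProxy" are not logged.
    if headers.get("User-Agent") == "HAProxy":
        return None
    # %{X-Forwarded-For}i logs the header value as received; Apache
    # writes "-" when the header is absent.
    return headers.get("X-Forwarded-For", "-")


print(client_field_to_log({"User-Agent": "HAProxy"}))
print(client_field_to_log({"User-Agent": "Mozilla/5.0",
                           "X-Forwarded-For": "203.0.113.7"}))
```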

A weird bug

If you are using a version of HAProxy older than 1.3.23 (which is still the case on Ubuntu Lucid), there is a nasty bug in the cookie parser that causes HAProxy to not recognize the persistence cookie if it appears after cookies whose name or value contains special characters. When that happens, HAProxy issues a new persistence cookie even if there is a valid one in the request, possibly directing users to another backend server and thus breaking their sessions. This was fixed in HAProxy 1.3.23. Whether the bug triggers depends on what the application does with cookies, and differences in behavior between browsers have also been observed. So, you may or may not hit the bug.

If working with the bugged version and upgrading is not possible (for whatever reason), one way to work around it is to rewrite the Cookie: header received from clients in the Apache frontend, so that HAProxy's cookie always comes first (if it's present, of course).

To use this kludge, mod_headers needs to be enabled.

# Edit Cookie: header so the HAProxy's persistence cookie comes first!

RequestHeader edit Cookie: "^(.*; *)?(SRVID=[^ ;]+) *;? *(.*)$" "$2; $1 $3"

So essentially what this does can be summarized with the following table:

| Browser sends | HAProxy sees |
| --- | --- |
| Cookie: a=b; c=d; e=f | Cookie: a=b; c=d; e=f (no change) |
| Cookie: SRVID=app1_2; a=b; c=d | Cookie: SRVID=app1_2; a=b; c=d |
| Cookie: a=b; SRVID=app1_2; c=d | Cookie: SRVID=app1_2; a=b; c=d |
| Cookie: a=b; c=d; SRVID=app1_2 | Cookie: SRVID=app1_2; a=b; c=d |

The regular expression must also consider that there can be an arbitrary number of spaces between cookies.
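The substitution can be tried out in Python, whose `re` module handles this particular pattern the same way. This is only a sketch for experimenting with the regular expression: the helper names are made up, and the result is normalised before comparison because the raw output may contain extra spaces or a trailing semicolon.

```python
import re

PATTERN = re.compile(r"^(.*; *)?(SRVID=[^ ;]+) *;? *(.*)$")


def srvid_first(cookie_header):
    # Same substitution as "$2; $1 $3"; an unmatched group expands to "".
    return PATTERN.sub(r"\2; \1 \3", cookie_header)


def cookie_list(cookie_header):
    # Normalise spacing so results can be compared cookie by cookie.
    return [c.strip() for c in cookie_header.split(";") if c.strip()]


for value in ("a=b; c=d; e=f",
              "SRVID=app1_2; a=b; c=d",
              "a=b; SRVID=app1_2; c=d",
              "a=b; c=d; SRVID=app1_2"):
    print(cookie_list(srvid_first(value)))
```

In every case where SRVID is present, it ends up as the first element of the list, and the other cookies keep their relative order.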

After the Cookie: header editing is applied, HAProxy's cookie always comes first, and things sort of work. Obviously, this is a workaround (and a pretty bad one), not a fix. Also, it's likely that there are obscure, or even not-so-obscure, cases where it fails. Alternatives to this kludge, all of them preferable to the above method, include:

- Modify the applications on the backend servers so that cookie names and values never include the patterns that trigger the bug

- Create your own package for HAProxy 1.3.23

- Switch to a distro which includes HAProxy 1.4.x, or at least a version greater than 1.3.22

It depends on the application

Keep in mind that not every application lends itself to being put behind a reverse proxy. Some applications generate absolute URLs in their HTML code, to name just one especially bad and common behavior. In those cases, additional work is needed beyond what is shown here; it can involve fixing the application (the right thing to do) or adding more kludges to the load balancing setup (which can be a lot of silly work).